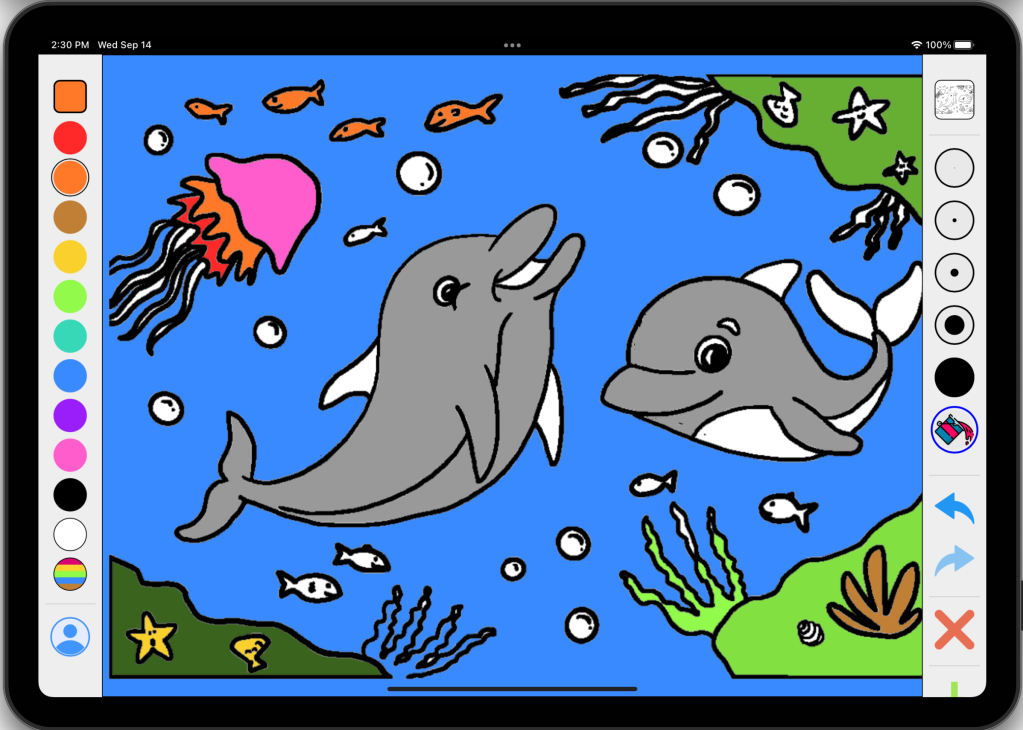

Over the past couple of years I’ve been building KidzFun.art, an art & education app for my young kids and hopefully yours. The first feature I ever added was simple colouring pages, hand drawn by my lovely and talented wife. However, that was a slow and laborious process, and with the advances in AI since I began, I decided to add the ability for young children to generate a near-infinite number of fun, age-appropriate colouring pages using AI.

I chose to use OpenAI’s Dall-E for this, partly as an excuse to use their SDK in production. That SDK is generated by Stainless, a fantastic company I’m advising.

There were four main things required in order to ship this feature:

- Use the OpenAI Node SDK to generate the image. This turned out to be by far the simplest part, hat tip to Stainless and the OpenAI team.

- Build a UI suitable for young children that gave them the ability to easily create an infinite number of colouring pages, while ensuring that the generated content is age-appropriate.

- Allow parents (but not their kids) to pay for the OpenAI costs, so I don’t go broke.

- Cache as much generated content as is feasible to minimize the costs.

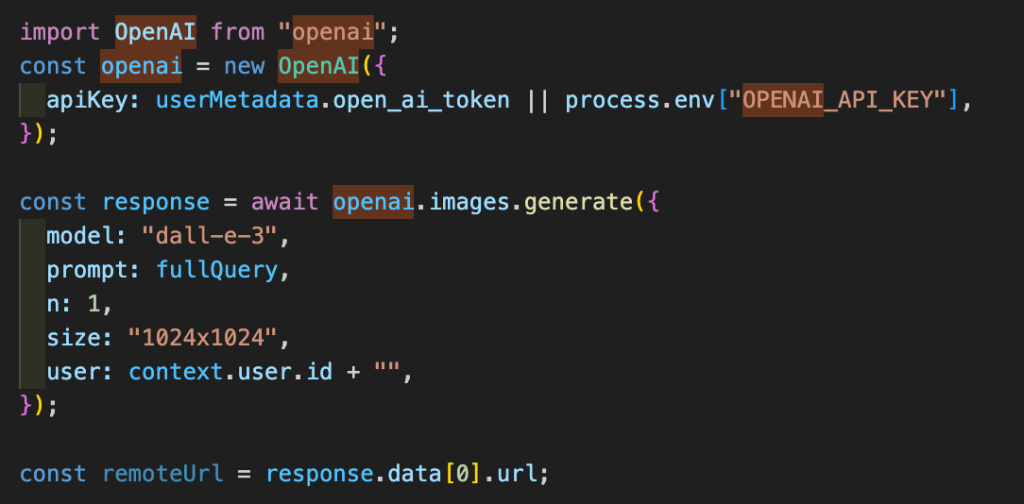

Using the OpenAI Node SDK

This part was trivial. I created a new Next.js API endpoint that accepts a query parameter and creates the OpenAI object using either an API token provided by the user (more on that later) or the default one on my account.

It then simply calls the openai.images.generate function, which after a few seconds (it’s not particularly fast) returns an array of URLs, in my case just one.
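For readers who want something to copy from, here is a minimal sketch of the core of such an endpoint. It is not the app’s actual code: the `ImageClient` interface, the `pickApiKey` and `generateColouringPage` helpers, the model name and the image size are all assumptions for illustration. The interface only mirrors the shape of the `openai.images.generate` call so the sketch can be run against a stub without the SDK installed.

```typescript
// Structural stand-in for the part of the OpenAI client used below.
// In a real endpoint this would be an instance created with
// `new OpenAI({ apiKey })` from the `openai` package.
interface ImageClient {
  images: {
    generate(params: {
      model: string;
      prompt: string;
      n: number;
      size: string;
    }): Promise<{ data?: Array<{ url?: string }> }>;
  };
}

// Prefer the key supplied by the user; otherwise fall back to the default key.
function pickApiKey(userKey: string | undefined, defaultKey: string): string {
  const trimmed = userKey?.trim();
  return trimmed ? trimmed : defaultKey;
}

// Ask for a single image and return its URL.
async function generateColouringPage(
  client: ImageClient,
  prompt: string
): Promise<string> {
  const response = await client.images.generate({
    model: "dall-e-3", // assumed model name
    prompt,
    n: 1, // only one image is needed
    size: "1024x1024", // assumed size
  });
  const url = response.data?.[0]?.url;
  if (!url) {
    throw new Error("No image URL returned");
  }
  return url;
}
```

The API route itself would read the query parameter, build the client with the key from `pickApiKey`, and send the URL returned by `generateColouringPage` back to the browser.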

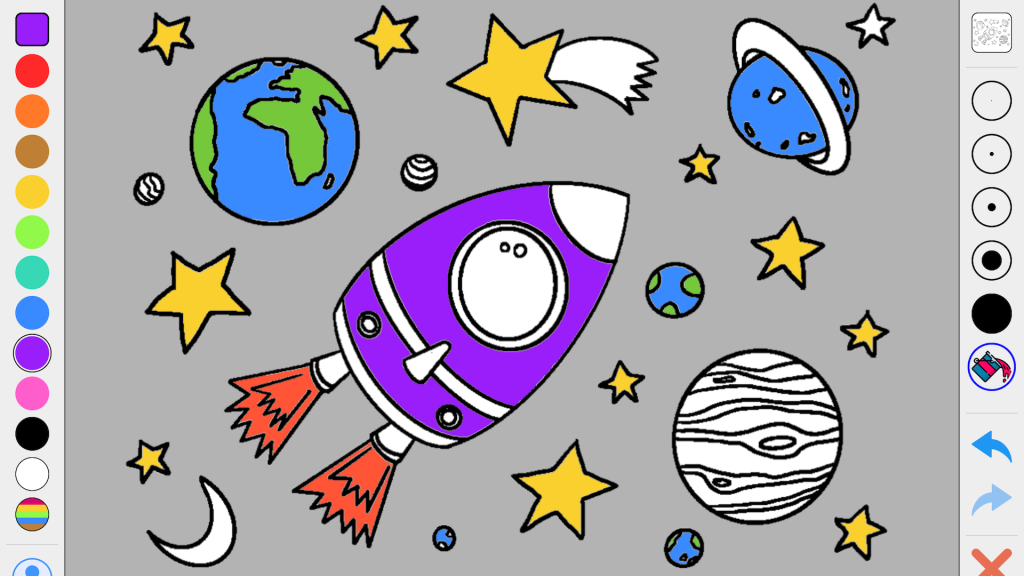

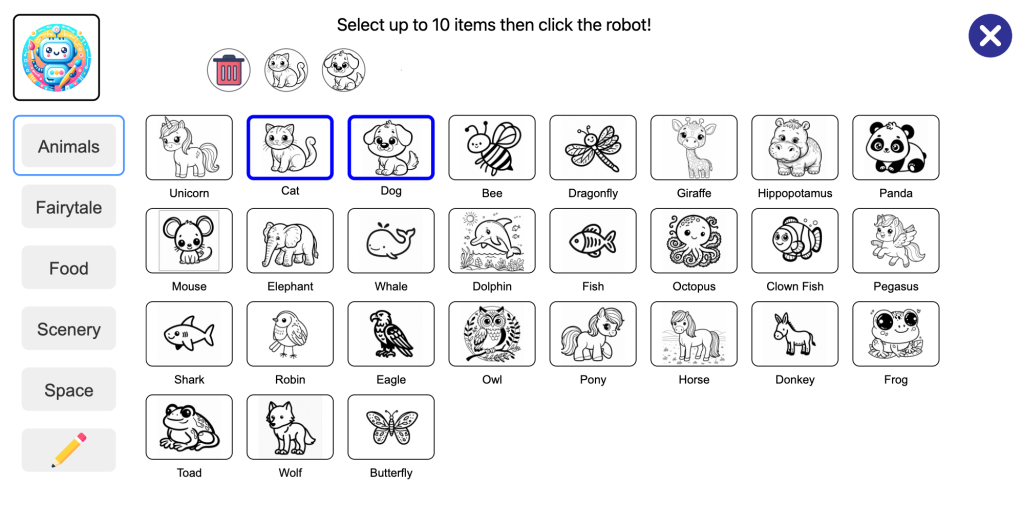

A UI suitable and safe for young kids

To make the UI simple, I created a tabbed UI that lets kids select up to ten things to put into the colouring page. When testing this with my 5- and 7-year-olds, they found it intuitive – you can’t beat having your users living in your house!

Some older kids may want more control, so I also allowed them to type in the image description manually, using the pencil icon you see in the image above.

To ensure the images are safe, I add a number of instructions to the prompt on the server side, telling OpenAI to only generate age-appropriate content.
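As a rough illustration of that server-side step, the sketch below turns the selected items into a prompt and prepends safety instructions. The wording of `SAFETY_INSTRUCTIONS`, the `buildPrompt` name and the prompt layout are hypothetical; the app’s real instructions are not shown here. Only the ten-item limit comes from the description above.

```typescript
const MAX_ITEMS = 10; // the UI lets kids pick up to ten things

// Hypothetical safety instructions, for illustration only.
const SAFETY_INSTRUCTIONS = [
  "Black and white line art colouring page for young children.",
  "Friendly, age-appropriate content only.",
  "No violence, no scary imagery, no text.",
];

// Build the full prompt on the server so the client cannot strip the instructions.
function buildPrompt(items: string[]): string {
  const cleaned = items
    .map((item) => item.trim().toLowerCase())
    .filter((item) => item.length > 0)
    .slice(0, MAX_ITEMS); // enforce the limit again on the server
  if (cleaned.length === 0) {
    throw new Error("Pick at least one thing to draw");
  }
  return `${SAFETY_INSTRUCTIONS.join(" ")} The picture shows: ${cleaned.join(", ")}.`;
}
```

Because the instructions are added on the server, a child (or a curious older sibling) cannot remove them by editing the request in the browser.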

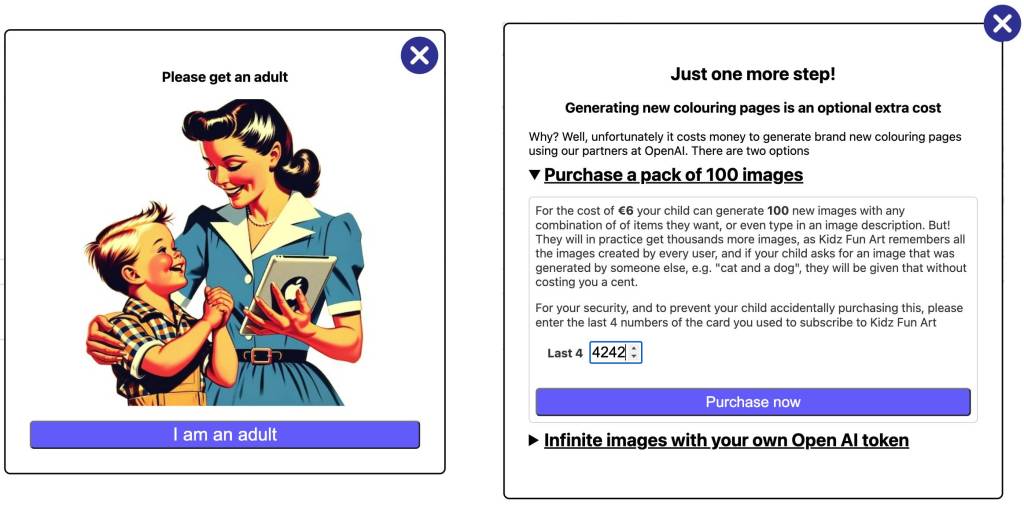

Simple payment for adults only

Using Dall-E costs money, and so it’s necessary for users to pay for this. I kept it simple, allowing parents to buy a pack of 100 image generations at a time, which is likely to last a long long time (see later for why).

You never want to be in a situation as an app developer where a child accidentally spends their parents’ money. To prevent this, the parent must provide the last four digits of their credit card. I use Stripe for payments, and users of the AI generation feature must already be subscribers, so I have a record of their credit card and I can simply match against that.
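The check itself can be very small. The sketch below is an assumption about how such a comparison might look, not the app’s code: `isParentVerified` is a hypothetical helper, and `storedLast4` is assumed to come from the subscriber’s saved card (Stripe exposes this as `card.last4` on a card payment method).

```typescript
// Compare what the parent typed with the last four digits on file.
function isParentVerified(typed: string, storedLast4: string): boolean {
  const digits = typed.trim();
  // Require exactly four digits so empty or partial input never passes.
  if (!/^\d{4}$/.test(digits)) {
    return false;
  }
  return digits === storedLast4;
}
```

A comparison like this would presumably run on the server, so that the stored digits are never sent to the browser where a child could find them.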

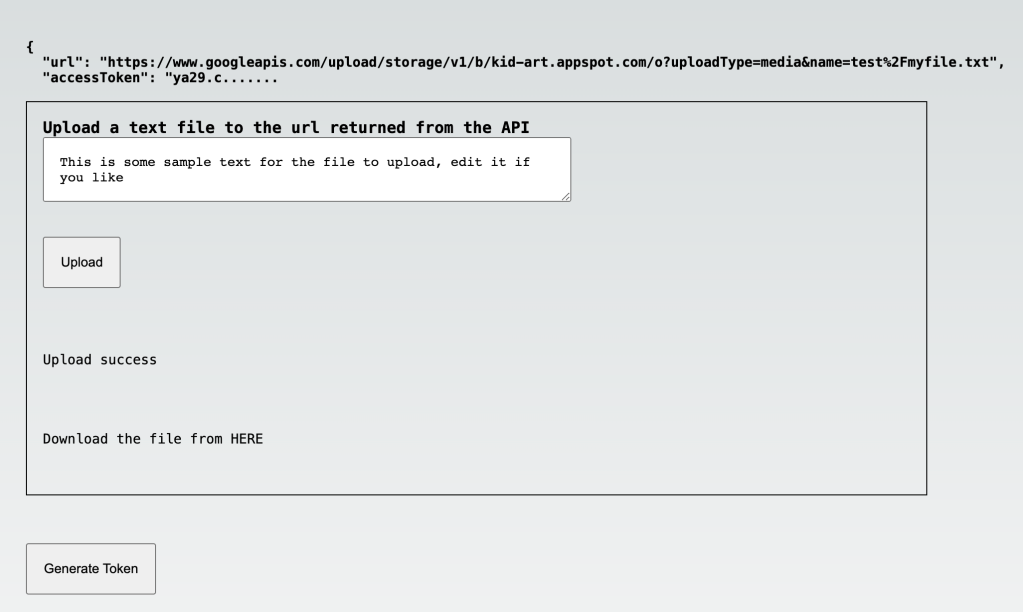

Of course, if you’re technically minded and want full control over the spending and budget, you can generate your own OpenAI API key and provide that instead of purchasing a pack of pictures.

Caching to minimize costs

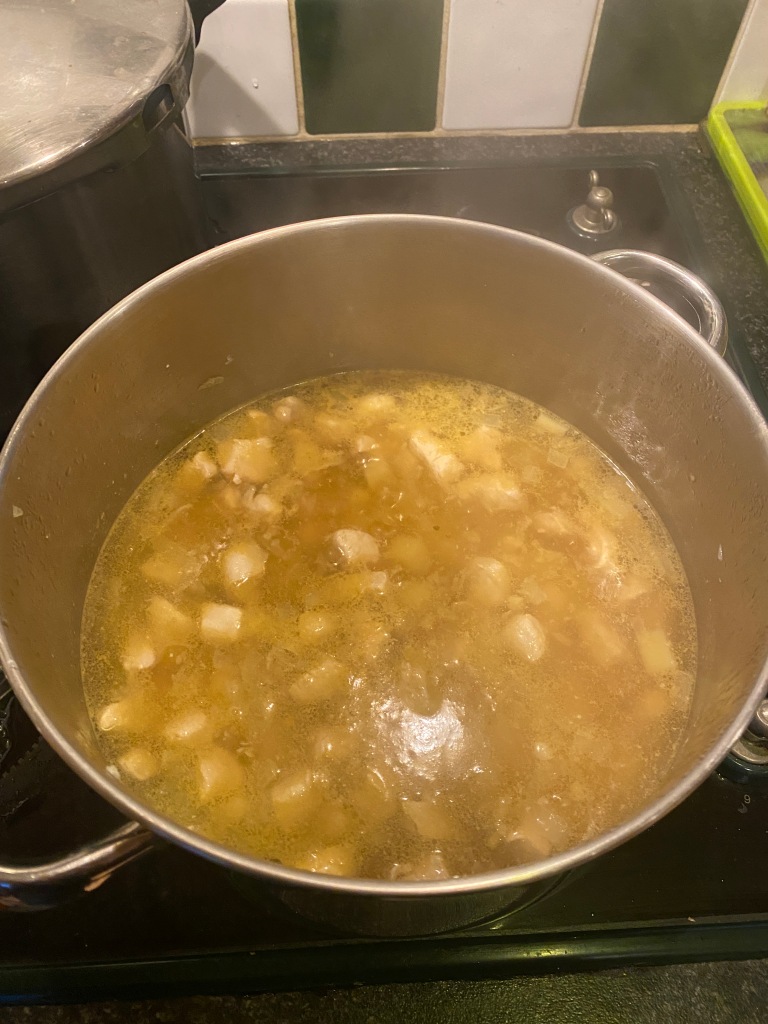

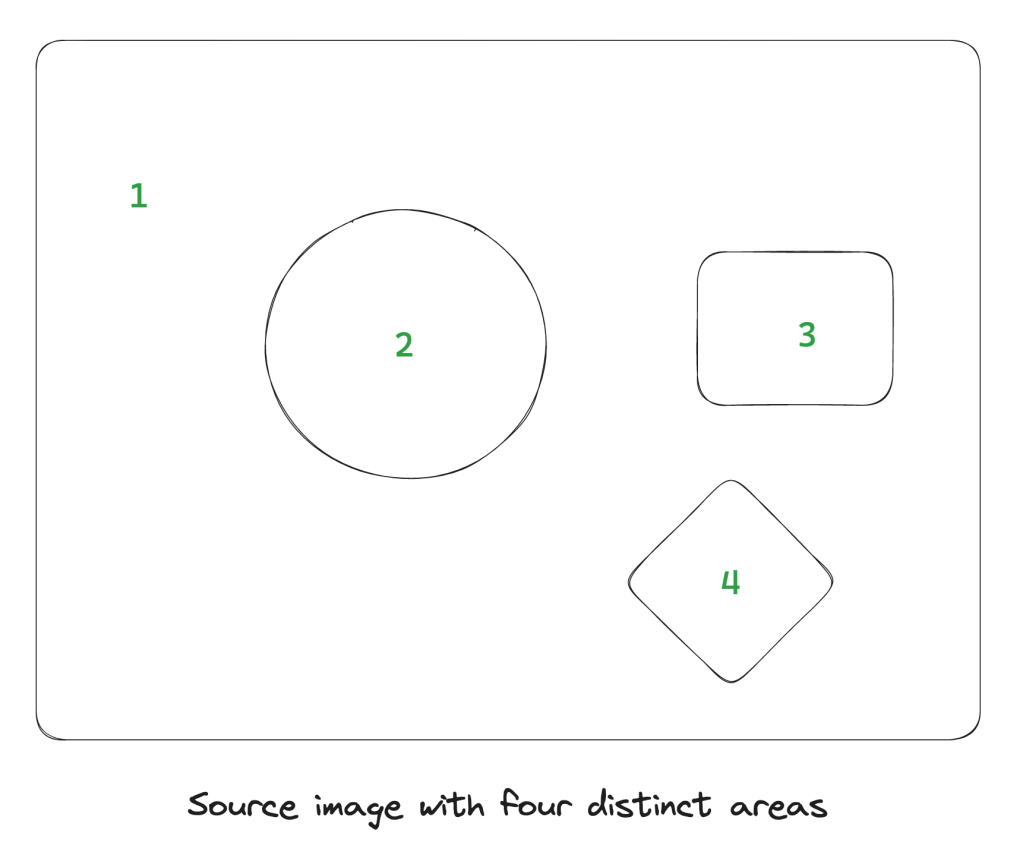

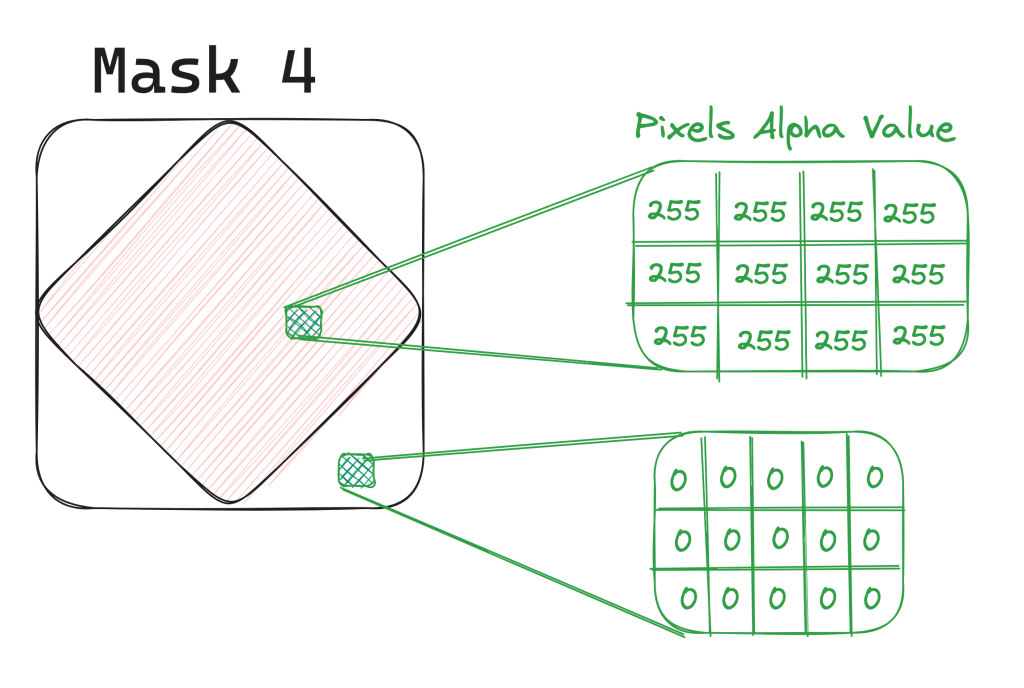

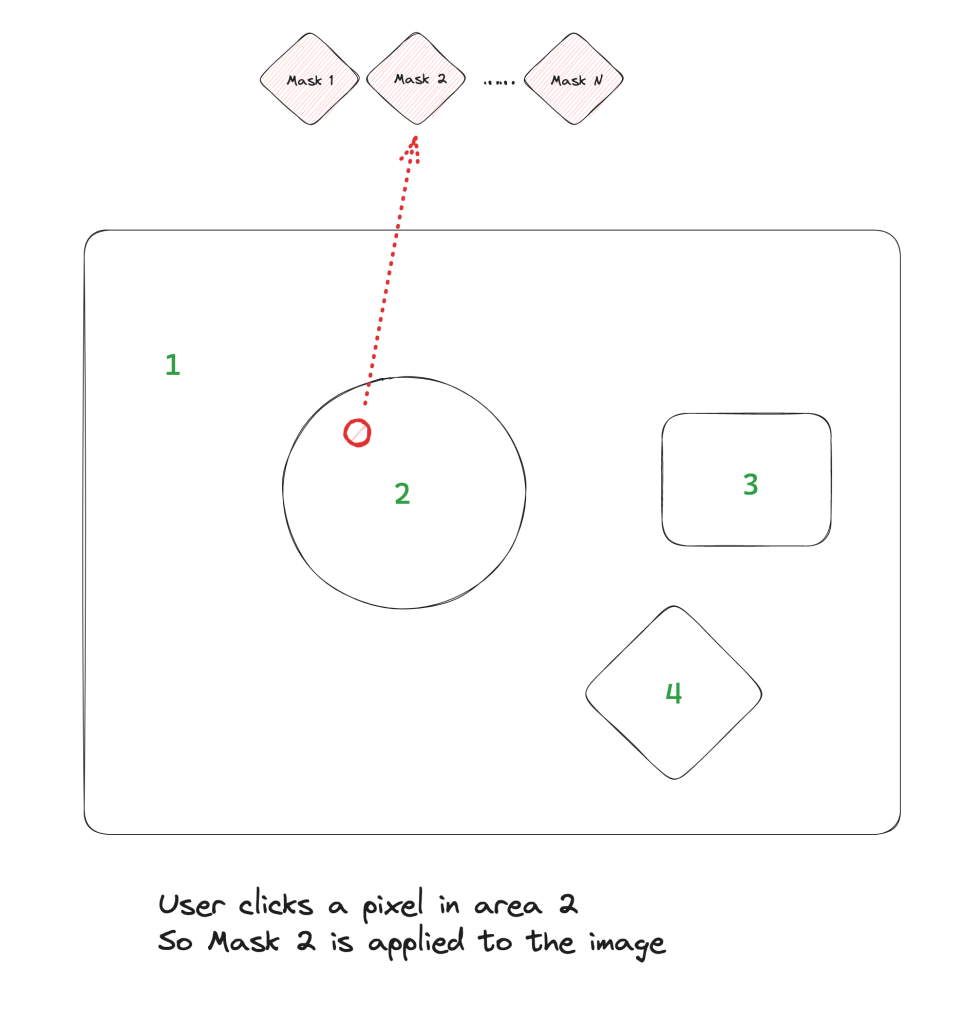

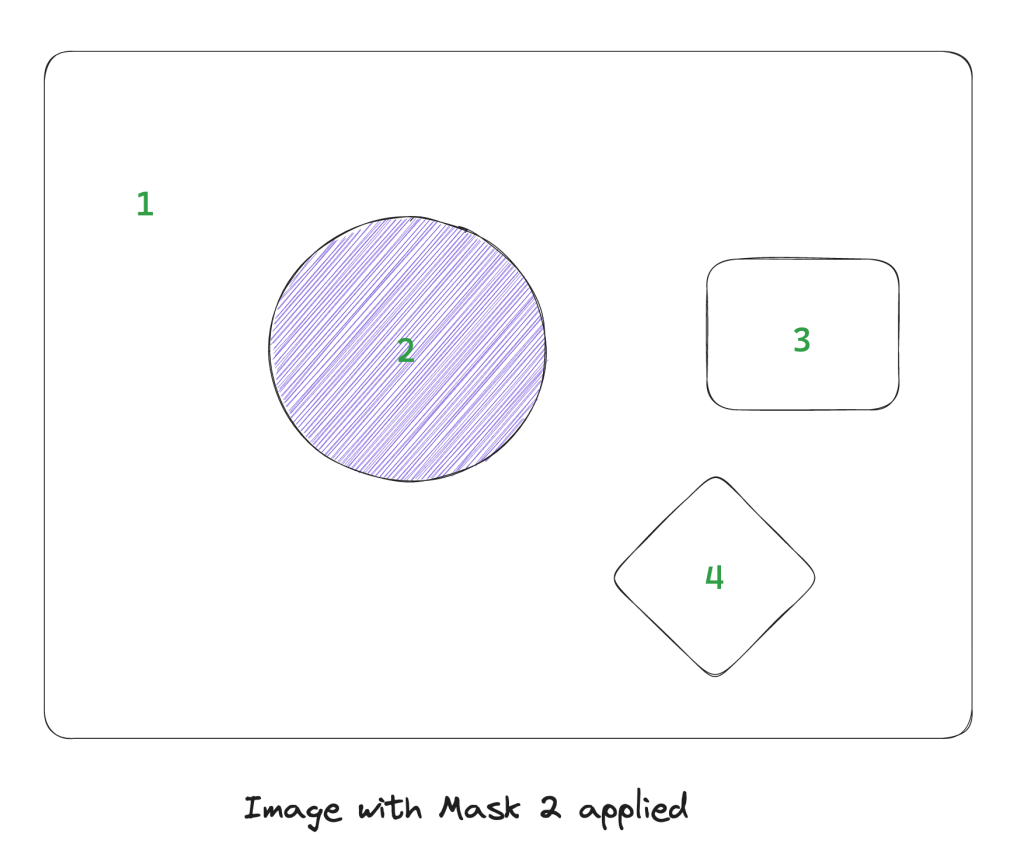

A big benefit of giving kids pre-determined items to place in the picture, rather than free text, is that many requests are likely to be similar. I use this to cut down on generation costs. I use Firebase and Google Storage on the back end. Every time a child accepts a generated image, I cache both a large and a small version of it in Google Storage and make a record of it in Firebase, noting the “tokens” associated with it, e.g. “dog, cat, classroom”.
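One way to make such records easy to look up is to derive a single key from the tokens. The sketch below is a guess at how that could work, not a description of the app: `cacheKey` is a hypothetical helper, and it assumes that order and capitalisation should not matter, so “dog, cat” and “Cat, dog” would share the same cached images.

```typescript
// Build a lookup key from the selected tokens.
// Assumption: order, case and duplicates are ignored.
function cacheKey(tokens: string[]): string {
  const normalised = tokens
    .map((token) => token.trim().toLowerCase())
    .filter((token) => token.length > 0);
  const unique = Array.from(new Set(normalised)).sort();
  return unique.join(",");
}
```

For example, `cacheKey(["dog", "cat", "classroom"])` and `cacheKey(["Classroom", "dog", "cat"])` both give `"cat,classroom,dog"`, which could then be stored alongside the image record.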

The next time a child selects “dog, cat, classroom”, they will be shown the cached images first, without subtracting from their count of purchased image generations. Only once the child has rejected all the cached images is a new image generated with Dall-E and subtracted from their pre-purchased allocation. In this way, as more children use the feature, it will take longer and longer for the purchased allocation to run out.

For the sake of safety, only images generated by selecting the provided tokens are cached. If a user writes in free-form text, that image is never cached nor shown to anyone else. They simply download it and colour it in.
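The rules above can be summarised as a small decision function. This is a simplified sketch under stated assumptions: the `PageRequest` and `NextStep` types and the `nextStep` function are hypothetical names, the cached and rejected images are plain URL lists rather than Firebase records, and users who supply their own API key (and so have no purchased allocation) are ignored.

```typescript
interface PageRequest {
  source: "tokens" | "freeText"; // picked from the tabs, or typed by hand
  cached: string[]; // URLs of cached images for this selection
  rejected: string[]; // cached URLs the child has already turned down
  credits: number; // remaining purchased generations
}

type NextStep =
  | { kind: "showCached"; url: string }
  | { kind: "generate"; creditsAfter: number; cacheIfAccepted: boolean }
  | { kind: "outOfCredits" };

function nextStep(req: PageRequest): NextStep {
  // Cached images are only ever shown for token-based selections.
  if (req.source === "tokens") {
    const unseen = req.cached.find((url) => req.rejected.indexOf(url) === -1);
    if (unseen !== undefined) {
      return { kind: "showCached", url: unseen }; // free: no credit is used
    }
  }
  if (req.credits <= 0) {
    return { kind: "outOfCredits" };
  }
  // A new generation costs one credit; free-text results are never cached.
  return {
    kind: "generate",
    creditsAfter: req.credits - 1,
    cacheIfAccepted: req.source === "tokens",
  };
}
```

Keeping the decision in one pure function like this makes it easy to check that a child never loses a credit while there are still cached images left to show.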

That’s all folks!

Go try out KidzFun.art today on any tablet, laptop or desktop!